Podcast: Play in new window | Download | Embed

LM101-032: How To Build a Support Vector Machine to Classify Patterns

Episode Summary:

In this 32nd episode of Learning Machines 101, we introduce the concept of a Support Vector Machine. We explain how to estimate the parameters of such machines to classify a pattern vector as a member of one of two categories as well as identify special pattern vectors called “support vectors” which are important for characterizing the Support Vector Machine decision boundary. The relationship of Support Vector Machine parameter estimation and logistic regression parameter estimation is also discussed.

Show Notes:

Hello everyone! Welcome to the thirtieth-second podcast in the podcast series Learning Machines 101. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in hopefully an entertaining and educational manner.

I have had several requests for a discussion on support vector machines including requests by my listeners Shaurabh and Sean. If you would like to suggest a topic for me to discuss, feel free to submit your request by updating your profile when you subscribe to learning machines 101 at our website: www.learningmachines101.com . Or alternatively, you can submit your requests by visiting the “Statistical Machine Learning Group” on LinkedIn.

Support vector machines are a widely used class of pattern recognition machines. Although the original concept was first introduced into the literature in 1963 by Vapnik and Chervonenkis. It was not until the mid-1990s that the modern version of the support vector machine concept was published. The Support Vector Machine may be viewed as an extension of the theory of the Perceptron which was described in Episode 15.

In Episode 14, we discussed the concept of a McCulloch-Pitts formal neuron. The modern version of the McCulloch-Pitts formal neuron which is also known as a Threshold Logic Unit (TLU) can be used as a pattern classification machine. We represent a pattern of information as a list of numbers. For example, the pattern of information might be a list of 100 numbers corresponding to measurements of the heat index, time-of-year, rain-fall, humidity over the past five days. Given this list of 100 numbers which we will call an “input pattern vector” of dimension 100, we want to use the TLU to predict whether it will rain tomorrow or whether it will not rain tomorrow. For a 100-dimensional input pattern vector, the TLU has 100 and 1 free parameters. The TLU computes a weighted sum of each element of the input pattern vector and then adds an additional number to that weighted sum. That additional number is called the “intercept” in the statistics literature and is called the “bias” in the artificial neural network literature. The weighted sum plus the intercept is called the “net input”. If the “net input” is greater than zero, then the input pattern is classified as a pattern of measurements that predicts it will rain tomorrow. If the “net input” is less than zero, then input pattern is classified as a pattern of measurements that predicts it will not rain tomorrow.

Let’s go through the math calculations again just to clarify the calculations. Suppose now we had an input pattern vector with only two elements. Then our classification algorithm works as follows. First, the first element of the input pattern is multiplied by parameter value 1 and then added to the second element of the input pattern multiplied by parameter value 2 and then parameter value 3 (the intercept parameter value) is added to obtain the net input. Second, if the net input is greater than zero, the output of the TLU is a +1. If the net input is less than zero, then the output of the TLU is -1. Thus, the TLU classifies an input pattern into a +1 category or a -1 category. This TLU which processes two-dimensional patterns has a two-dimensional weight vector and a scalar bias. The concatenation of the weight vector and bias is called the parameter vector for the TLU.

Finally, note that the McCulloch-Pitt formal neuron generates a +1 for the first category and a 0 for the second category rather than +1 and -1 but this is a relatively minor technical difference. However, in different applications, this minor technical difference could have a differential impact on performance.

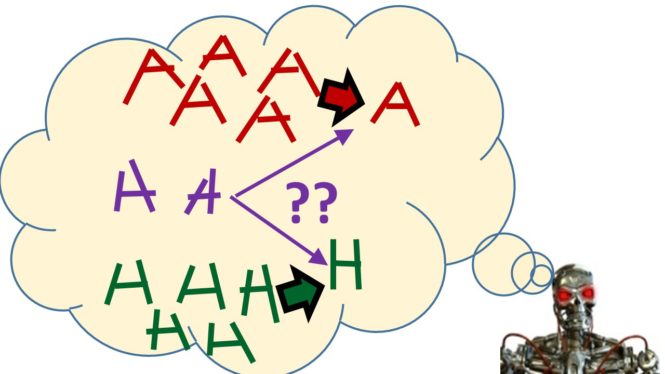

So the classification component of a support vector machine is literally equivalent to the behavior of a McCulloch-Pitts formal neuron or a TLU. The terminology Support Vector Machine is derived from the method used to estimate or learn the free parameters of the Support Vector Machine or SVM. This method is a supervised learning algorithm method which means that our training data consists of a collection of N input patterns. In addition, our training data includes a number which is either -1 or +1 indicating the category assignment for each of the N input patterns. Assume that the total number of possible input patterns is much larger. For example, the total number of possible input patterns might be M where M is much larger than N. In other words, suppose our training data consisted of N 100-dimensional pattern vectors and their category memberships. Assume that each pattern vector’s element is binary-valued and can take on the value of either zero or one. Now the number of training patterns might be quite large. For example, N might be equal to 1 million indicating there are 1 million training patterns and the category membership for each of these 1 million training patterns has been provided. On the other hand, even for this overly restrictive case where each pattern vector’s element is binary-valued, it follows that M = 2100 which means that there are trillions of millions of possible pattern vectors. We want our SVM to correctly classify these trillions of millions of possible pattern vectors which it has never seen in the training data.

Note that the set of all possible input patterns can be divided into three distinct non-overlapping sets for a given TLU parameter vector. First, we have the set of input patterns which generate a net input which is positive and thus a TLU output of +1. Second, we have the set of input patterns which generate a net input which is negative and thus a TLU output which is -1. And third, we have the set of input patterns which generate a net input which is exactly equal to zero. This third set of input patterns are “ambiguous”. The TLU doesn’t know whether to classify them as belonging to +1 category or as belonging to the -1 category. So what we would like to do is figure out a way to somehow reduce the chances that an observed input pattern will belong to this ambiguous category. If we can do that, then we would hope that the classification performance of the TLU would be more robust. Note that the set of patterns in the “ambiguous category” can be interpreted as defining a “decision boundary” in a multi-dimensional hyperspace!!

The SVM approach approaches the problem of parameter estimation and learning for a TLU in the following manner. Note that the set of training data does not contain any ambiguous training patterns, so the SVM parameter vector is first adjusted to correctly classify all of the training patterns (if this is possible). Then it might be the case that the SVM parameter vector could be perturbed or altered without resulting in an incorrect classification. So there might be many thousands or millions of different choices for the SVM parameter vector. All of these choices for the SVM parameter vector will correctly classify the N training stimuli! So which of these millions of different choices for the SVM parameter vector should we choose?

Let’s quickly review the concept of a vector magnitude. A vector is a list of numbers. To compute the magnitude of a vector we simply square each of the elements of the vector and add up the squares of all vector elements. We then take the square root of the sum of the squares of the vector’s elements and that is called the vector’s magnitude.

If we have two vectors we can compute the “distance” between two vectors called Vector 1 and Vector 2 by the following procedure. First, subtract the first element of vector 1 from the first element of vector 2 and place that difference in the first element of a “distance vector”. Then subtract the second element of vector 1 from the second element of vector 2 and place that difference in the second element of the distance vector. Continue until we have computed the distance vector. The vector magnitude of the distance vector is defined as the Euclidean distance between Vector 1 and Vector 2.

The SVM answer is as follows. Compute the “distance” between the set of input patterns which we would like the SVM to classify as -1 and the set of input patterns which we would like the SVM to classify as +1. This “distance” will be different choices of parameter vectors. We want to pick a parameter vector which not only correctly classifies all of the unambiguous input patterns in the training data set but, in addition, minimizes the distance between input patterns classified as -1 and the input patterns classified as +1. How do we measure the distance between these two sets of input patterns? The distance is measured by picking one input pattern from the set of input patterns which should be classified as +1 and one input pattern from the set of patterns which should be classified as -1 and computing the Euclidean distance for that particular pair of input vectors. We then repeat this for all possible pairs in the training data so if we have 500 pattern vectors which are classified as +1 and we have 600 pattern vectors which are classified as -1, then we compute the distances for the 500 multiplied by 600 possible pairs and define the “smallest distance” as the distance between the two sets of input pattern vectors.

A mathematical analysis of this situation shows a very interesting result. If it is possible to find a group of parameter vectors which correctly classify all of the training patterns, then the parameter vectors in that group of parameter vectors which MAXIMIZE the Euclidean distance between the set of input patterns classified as +1 and the set of input patterns classified as -1 are precisely the parameter vectors whose weight vectors have the smallest magnitudes.

So this suggests that our SVM learning algorithm should work as follows. Given a collection of training data, find the TLU parameter vector which has the smallest weight vector magnitude subject to the constraint that the TLU parameter vector correctly classifies all of the training pattern vectors by making the net input for each pattern vector is either greater than +1 or less than -1. This is called a constrained optimization problem since we are trying to minimize a function subject to a particular set of constraints.

To keep things simple, let’s consider a much simpler constrained optimization problem. Suppose we have a collection of rectangles and we want to find the rectangle with the smallest area subject to the constraint that the sum of the sides of the rectangle is equal to four. Notice that the constraint is really important because the rectangle with the smallest area without the constraint is simply a teeny-tiny rectangle whose area is very close to zero. When we introduce the constraint that the sum of the sides of the rectangle must equal four, then this makes the problem more interesting. The solution to this problem can be solved using the method of Lagrange Multipliers which basically works by defining an objective function called the Lagrangian. For this example, the Lagrangian objective function takes two numbers corresponding to the width and length of a rectangle and a third number called the Lagrange multiplier and maps those three numbers into an output number. In particular, this Lagrangian has two terms. The first term is the product of the width and length of the rectangle and then that is added to the Langrage Multiplier multiplied by the rectangle perimeter minus four where the rectangle perimeter is simply equal to twice the rectangle width plus twice the rectangle length. The first term specifies that we want to minimize the area of the rectangle, while the second term of the Lagrangian specifies that we want to do this minimization subject to the constraint that the perimeter length is equal to four.

The main theoretical result for Lagrange multipliers is that if we want to find the rectangle with the smallest area subject to the constraint that the sum of the sides of the rectangle is equal to four we can solve this problem by looking at the critical points of the Lagrangian. One of these critical points will be our solution to the constrained optimization problem. Note that the set of critical points of a function is obtained by taking the derivative of the function and setting that derivative equal to zero. A vector that makes the derivative of the Lagrangian equal to zero is called a critical points. Some of these critical points might be minimizers of the Lagrangian but often the critical points of interest are not minimizers they are instead saddlepoints of the Lagrangian. Notice that a critical point of the Lagrangian includes a value for the Lagrange multiplier. For more complicated problems with multiple constraints, there is a different Lagrange multiplier for each constraint.

Ok…so now let’s return to our original SVM problem we have decided that our goal is to minimize the LTU weight vector magnitude subject to N constraints where each one of these N constraints corresponds to a particular input pattern in the training data set. This defines a Lagrangian. Now suppose we find an OPTIMAL parameter vector and set of OPTIMAL Lagrange multipliers that correspond to a critical point of the Lagrangian that solves our constrained optimization problem. Typically, most of the OPTIMAL Lagrange multipliers will be equal to zero because our optimization problem is focused upon minimizing the weight vector. This means that the training stimuli associated with the non-zero Lagrange multipliers were sufficient to define the original Lagrangian and we could have omitted the other training stimuli associated with the Lagrange multipliers with zero values. The few training stimuli associated with the non-zero Lagrange multipliers are called the SUPPORT VECTORS because they provide the critical information required to define the OPTIMAL parameter vector and hence the SVM decision boundary.

So this concludes our very quick introduction to the SVM concept in the case where it is possible to find a parameter vector which correctly classifies all of the input patterns in the training data set. In this situation where such a TLU parameter vector correctly classifies all input patterns in the training data set, we call the training data set LINEARLY SEPARABLE.

In the real world, of course, we often encounter training data sets which are not linearly separable. Fortunately, we can deal with such situations by making only a slight modification to the unconstrained SVM optimization problem for the linearly separable case.

The extended version of the SVM learning algorithm designed for the case where the training data are almost linearly separable is now defined. Suppose that a training stimulus is correctly classified as a +1 and the net input for the TLU is greater than +1. Or suppose the training stimulus is correctly classified as a -1 and the net input for the TLU is less than -1. When the set of training stimuli has these properties, we would like the constrained optimization problem to be equivalent to the constrained optimization problem we used for the linearly separable case. However, when the net input for a +1 category training stimulus is less than +1 then our goal will be to minimize a positive constant multiplied by the magnitude of the weight vector plus 1 minus the net input for the +1 category training stimulus. In other words, rather than just minimizing the weight magnitude one also minimizes the discrepancy between the net input and +1 when the net input for a +1 category training stimulus is strictly less than +1.

Incorporating these inequality constraints, one can construct a Lagrangian for the nonlinearly separable case and use the Lagrangian to not only identify an optimal parameter vector but additionally identify support vectors as in the linearly separable case. This new Lagrangrian will be called the soft margin SVM objective function. Another benefit of this analysis is that the Lagrange optimization problem can be formulated as a quadratic programming problem which can be solved in a computationally efficient manner. One can download software to solve quadratic programming problems or alternatively one can use Newton’s method to find a critical point of the Lagrangian. Newton’s method has a second-order convergence rate as opposed to standard gradient descent but has greater computational cost per iteration. A quick warning, however, don’t use standard gradient descent methods to find a critical point of the Lagrangian because typically the critical point of interest will be a saddle point in this situation. An appropriate way to use gradient descent is to do gradient descent on the parameter vector while doing gradient ascent on the Lagrange multiplier. A nice discussion of this issue can be found in the 1988 NIPS paper by John Platt and Alan Barr titled “Constrained Differential Optimization”.

Using some algebraic arguments, it is also possible to rewrite the SVM unconstrained optimization problem for the nonlinearly separable case as a different type of unconstrained optimization problem. Let the delta error penalty for a training stimulus be defined as 1 minus the desired response of the TLU multiplied by the net input to the TLU. The ERROR penalty for a training stimulus is equal to the DELTA penalty if the delta penalty is positive. If the delta penalty is negative, then the error penalty for that training stimulus is defined as zero. Now define the soft margin SVM objective function which maps a parameter vector into a number such that the objective function is defined as the magnitude of the weight vector component of the parameter vector squared multiplied by a positive number lambda plus the sum of the ERROR penalties for all of the training stimuli. A strict local minimizer of the soft margin SVM objective function with an appropriate choice of lambda corresponds to a solution to the SVM constrained optimization problem for the nonlinearly separable case. The constant lambda is typically chosen using cross-validation methods as described in Episode 28.

The SVM approach is designed to solve a binary classification problem. How does this compare with more classical methods of statistical data analysis? A standard method of statistical data analysis for the case where one wishes to predict the likelihood of a binary response variable given an input pattern is called “logistic regression”. Most statistical data analysis software packages include logistic regression. An alternative formulation of the SVM constrained optimization problem provides an important connection between the SVM approach and binary logistic regression. This alternative formulation also provides a method for semantically interpreting the LAMBDA constant for the SVM in the nonlinearly separable case.

Suppose we modify the soft margin SVM objective function so that the error penalty for a training stimulus is the natural logarithm of one plus the exponential function of the delta penalty. If one graphs this error penalty, it turns out that this is a “smoothed version” of the original soft margin SVM objective function which we will call the logistic regression SVM objective function. In fact, in the special case where the net input to the TLU is sufficiently greater than +1 when the input pattern belongs to the +1 category and the net input to the TLU is sufficiently less than -1 when the input pattern belongs to the -1 category, then the logistic regression SVM objective function is an adequate approximation for the soft margin SVM objective function. Using a smoothed soft margin SVM objective function one can use Newton’s method or a memoryless BFGS method with automatic stepsize selection for parameter estimation as described in Episode 16.

Now here is the surprise. After some algebra, one can show that the logistic regression SVM objective function is a MAP estimation function as described in Episode 26 where the log-likelihood function is specified by a logistic regression probability model which computes the probability the desired response is +1 given the input pattern and parameter vector and whose parameter prior is a Gaussian probability density with mean zero and variance equal to the reciprocal of Lambda multiplied by twice N. In other words, minimizing the smoothed soft margin SVM objective function is mathematically equivalent to MAP estimation of a logistic regression model with a Gaussian prior. For large sample size N, the effects of the Gaussian prior become negligible and the MAP estimation and ML estimation problems are asymptotically equivalent as discussed in Episode 26. This type of prior for logistic regression is referred to as “ridge regression” in the statistics literature.

Note that the actual formula used to calculate the probability of the response variable value is a little different than the conventional formulas used in logistic regression modeling because typically the binary response variable in logistic regression model takes on the values of one and zero rather than one and negative one. But regardless of the representation of the response variable, minimizing the smoothed soft margin SVM is mathematically equivalent to using MAP estimation for estimating the parameters of a logistic regression model. And for sufficiently large sample sizes, MAP estimation is asymptotically equivalent to maximum likelihood estimation which is the method used in standard statistical software packages.

The semantic interpretation of the logistic regression soft margin SVM as a statistical pattern recognition machine is very important and attractive. First, it permits the classification algorithm to be interpreted as an algorithm that minimizes the probability of classification error with respect to a particular probability that the desired response will take on the value of +1 given the input pattern and the parameter vector. Second, it provides a semantic interpretation of the constant LAMBDA used in the original soft margin SVM. Briefly, LAMBDA can be interpreted as the reciprocal of twice the sample size multiplied by the Gaussian parameter prior variance where the square root of the Gaussian parameter prior variance can be interpreted as the expected range of an element in the weight vector before the training data has been observed. Third, the logistic regression soft margin SVM provides an explicit prediction of the probability that a response of +1 or -1 will be observed given an input pattern. Such probabilistic confidence values are not available in the standard soft margin SVM. Fourth, the statistical interpretation allows one to design statistical tests and analyses to determine if the statistical pattern recognition machine is able to adequately represent its statistical environment. Statistical tests for the adequacy of a statistical model are not possible if the assumptions of the statistical model are not provided.

And fifth, notice that the soft margin SVM attempts to maximize the distance between the set of input patterns which should be classified as +1 and the set of input patterns which should be classified as -1. This maximization is only achieved by the logistic regression soft margin SVM when the logistic regression soft margin SVM is very confident that all of the +1 category input patterns in the training data belong to the +1 category and all of the -1 category input patterns in the training data belong to the -1 category. Although some might view this as a limitation of the logistic regression soft margin SVM, this limitation could also be viewed as an advantage of the logistic regression soft margin SVM over the standard soft margin SVM because the logistic regression soft margin SVM (unlike the standard soft margin SVM) essentially assigns a different confidence probability to the classification of each training stimulus and then combines this collection of confidence probabilities to find the maximally likely parameter values given the observed data. In other words, the logistic regression SVM uses its estimated probabilities of correct classification to differentially weight every training stimulus including training stimuli which have been very confidently classified. Whether or not this is a strength or weakness of the logistic regression modeling methodology relative to the SVM methodology simply depends upon the nature of the particular statistical environment within which the learning machine lives.

In summary, the classical SVM appears to be a useful tool for identifying a collection of support vectors. And, in the early stages of data analysis and especially for “big data” problems, the soft margin SVM might be more preferable than a logistic regression modeling approach because it identifies a collection of support vectors. Once the support vectors have been identified, those support vectors could be used to design preprocessing transformations when the decision boundary for separating the training data into two categories is highly nonlinear.

In fact, this is another important topic. In this podcast we covered the case where the training data could be divided into two categories and although we considered the case where the training data was not linearly separable…we implicitly assumed that the absence of linear separability was due to a few outliers. There are some problems which are fundamentally not linearly separable and require a nonlinear preprocessing transformation to transform them into a linearly separable problem. In fact, the original concept of a Perceptron as proposed by Rosenblatt recognized the importance of such nonlinear preprocessing transformations. Such nonlinear preprocessing transformations can be either chosen by the design engineer, or they can be estimated using methods such as the Deep Learning methods described in Episode 23 and Episode 29 and Episode 30.

But there is another approach to designing nonlinear preprocessing transformations which is based upon specifying similarity relations. In fact, the similarity relations themselves can be used in certain cases to implicitly implement nonlinear preprocessing transformations. This is called the “kernel trick” which is often presented in the context of Support Vector Machines but, in fact, the kernel trick concept is much more general and thus applicable to many other machine learning algorithms as well. We will have another episode in the future which just focuses on the “kernel trick”.

Thank you again for listening to this episode of Learning Machines 101! I would like to remind you also that if you are a member of the Learning Machines 101 community, please update your user profile and let me know what topics you would like me to cover in this podcast.

You can update your user profile when you receive the email newsletter by simply clicking on the: “Let us know what you want to hear” link!

If you are not a member of the Learning Machines 101 community, you can join the community by visiting our website at: www.learningmachines101.com and you will have the opportunity to update your user profile at that time. You can also post requests for specific topics or comments about the show in the Statistical Machine Learning Forum on Linked In.

From time to time, I will review the profiles of members of the Learning Machines 101 community and comments posted in the Statistical Machine Learning Forum on Linked In and do my best to talk about topics of interest to the members of this group!

And don’t forget to follow us on TWITTER. The twitter handle for Learning Machines 101 is “lm101talk”!

And finally, I noticed I have been getting some nice reviews on ITUNES. Thank you so much. Your feedback and encouragement is greatly valued!

Keywords: Support vector machine, logistic regression, ridge regression, MAP estimation, McCulloch-Pitts formal neuron, Threshold Logic Unit, Lagrange Multipliers, constrained optimization

Further Reading:

Boser, Guyon, and Vapnik (1992). A Training Algorithm for Optimal Margin Classifiers

(first publication of modern SVM concepts)

Rosenblatt (1962). Principles of Neurodynamics : Perceptrons and the Theory of Brain Mechanisms

(compare concepts here to SVM paper!!)

Wikipedia Article on “Support Vector Machine”

Wikipedia Article on “Lagrange Multipliers”

Platt and Barr (1988). Constrained Differential Optimization in Proceedings of the 1987 NIPS Conference

Related Learning Machines 101 Episodes:

Episode 14, Episode 15, Episode 16, Episode 23 , Episode 26, Episode 29, and Episode 30

Copyright © 2015 by Richard M. Golden. All rights reserved.