
LM101-074: How to Represent Knowledge using Logical Rules (remix)

Episode Summary:

In this episode we will learn how to use “rules” to represent knowledge. We discuss how this works in practice and we explain how these ideas are implemented in a special architecture called the production system. The challenges of representing knowledge using rules are also discussed. Specifically, these challenges include: issues of feature representation, having an adequate number of rules, obtaining a set of rules that is consistent, and having rules that handle special cases and situations.

Show Notes:

Hello everyone! Welcome to the 74th podcast in the podcast series Learning Machines 101. This episode is a remix of Episode 3 in this podcast series. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in a manner that is, hopefully, both entertaining and educational. In this episode we focus on the concept of using “rules” to represent knowledge. We discuss how this works in practice and we explain how these ideas are implemented in a special architecture called the production system.

How can we represent the “knowledge” in a satisfactory manner? Suppose I have some piece of knowledge such as: Birds can fly. Knowledge of this type can often be represented in terms of IF-THEN rules. So, for example, we can rewrite the assertion Birds can fly as a logical IF-THEN rule. Specifically, IF something is a BIRD, THEN that something can FLY.

Such IF-THEN rules can also be used to guide behavior. For example, suppose we want to represent the knowledge that, if you are hungry, you can go to the store or go to a restaurant. We might then propose the logical rule:

IF I am hungry AND I have money, THEN EITHER go to the store OR go to a restaurant.
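To make the idea concrete, here is a minimal Python sketch of how IF-THEN rules like the two above might be stored. The `Rule` class, its field names, and fact strings such as `"is_bird"` are hypothetical choices for illustration, not part of any particular production system: the IF part is a set of conditions that must all hold (AND), and the THEN part is a tuple of alternatives (OR).

```python
from dataclasses import dataclass

# Hypothetical encoding of an IF-THEN rule.
@dataclass(frozen=True)
class Rule:
    conditions: frozenset  # IF part: every condition must hold (logical AND)
    alternatives: tuple    # THEN part: one or more alternatives (logical OR)

    def matches(self, facts):
        # The IF part is true only if every condition is among the known facts.
        return self.conditions <= facts

birds_fly = Rule(frozenset({"is_bird"}), ("can_fly",))
hungry = Rule(frozenset({"i_am_hungry", "i_have_money"}),
              ("go_to_store", "go_to_restaurant"))

facts = {"i_am_hungry", "i_have_money"}
for rule in (birds_fly, hungry):
    if rule.matches(facts):
        print(rule.alternatives)  # ('go_to_store', 'go_to_restaurant')
```

Note that the rule only lists the alternatives; deciding between the store and the restaurant is left to some other mechanism.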

A learning machine that has knowledge of checkers could represent its current state of knowledge using a collection of IF-THEN logical rules. For a learning machine that can play checkers, there might be two basic types of IF-THEN rules stored in its knowledge base. The first type of IF-THEN rule would describe the “legal moves” in the game of checkers; in other words, these rules describe how the checkers world works.

For the checkerboard playing problem, examples of rules describing how the world works might be:

(1) IF it is your turn to make a move, THEN you can only move one checker piece.

(2) IF your opponent can’t make a move, THEN you win the checkerboard game.

(3) IF it is your turn to move and you are not moving a KING, THEN you can only move your checker pieces in a forward-diagonal direction towards your opponent.
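World rule (3) can be written as a small function. This is a sketch under assumed conventions: squares are numbered `(row, col)` from 0 to 7, the hypothetical `forward` parameter is +1 for a player whose pieces advance toward higher row numbers (and -1 otherwise), and captures and occupied squares are ignored so that only the direction of movement is encoded.

```python
BOARD_SIZE = 8

def diagonal_moves(row, col, forward, is_king):
    """Return the diagonal squares a piece at (row, col) may step to.

    A non-king may only step forward; a king may step diagonally in
    either row direction. Occupancy and captures are ignored.
    """
    row_steps = (forward, -forward) if is_king else (forward,)
    moves = []
    for dr in row_steps:
        for dc in (-1, 1):
            r, c = row + dr, col + dc
            if 0 <= r < BOARD_SIZE and 0 <= c < BOARD_SIZE:  # stay on the board
                moves.append((r, c))
    return moves

print(diagonal_moves(2, 1, forward=1, is_king=False))  # [(3, 0), (3, 2)]
print(diagonal_moves(2, 0, forward=1, is_king=True))   # [(3, 1), (1, 1)]
```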

These are rules specifying how the world works as opposed to rules that specify STRATEGY.

Here are some examples of STRATEGY rules for playing checkers.

(1) IF you have an opportunity to make a checker piece a KING, THEN make a checker piece a KING.

(2) IF you have the opportunity to capture an opponent’s piece, THEN capture the piece.

(3) IF your KING is near the edge of the checkerboard, THEN move your KING in the direction towards the center of the checkerboard.
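Strategy rule (3) can likewise be sketched as a function that either recommends a move or does not fire. The definition of “near the edge” (the outermost ring of squares on an 8x8 board numbered 0 to 7) and the choice of diagonal step toward the center are assumptions made for illustration; a real player would also check that the target square is empty.

```python
BOARD_SIZE = 8
CENTER = (BOARD_SIZE - 1) / 2  # 3.5: the center lies between indices 3 and 4

def near_edge(row, col):
    # Assumed definition: the KING sits on the outermost ring of squares.
    return row in (0, BOARD_SIZE - 1) or col in (0, BOARD_SIZE - 1)

def step_toward_center(row, col):
    # Diagonal step that moves closer to the center in both directions.
    dr = 1 if row < CENTER else -1
    dc = 1 if col < CENTER else -1
    return (row + dr, col + dc)

def king_strategy(row, col):
    """IF the KING is near the edge, THEN recommend a move toward the center."""
    if near_edge(row, col):
        return step_toward_center(row, col)
    return None  # the rule does not fire

print(king_strategy(0, 5))  # (1, 4)
print(king_strategy(3, 4))  # None
```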

Given these examples, it seems reasonable to represent the machine’s knowledge in terms of rules. In fact, the method that the checker playing learning machine uses to make decisions is an example of what is called a Production System in artificial intelligence.

The generic Production System consists of three key components:

(1) a large collection of rules representing the production system’s knowledge.

(2) a “working memory” which specifies the production system’s current internal representation of the problem.

(3) a “conflict resolution” mechanism which helps the production system decide which rule or which subset of rules should be chosen.
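The three components above can be sketched as a simple recognize-act loop in Python. This is a hypothetical, simplified design rather than how ACT-R or SOAR work internally: rules only add features to working memory (real systems can also remove them), rules that would add nothing new are skipped so the loop terminates, and conflict resolution just picks the highest-priority matching rule. The feature names are made up for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Production:
    name: str
    conditions: frozenset  # IF part: features that must be in working memory
    adds: frozenset        # THEN part: features added to working memory
    priority: int = 0

def resolve_conflict(conflict_set):
    # Assumed strategy: choose the single highest-priority matching rule.
    return max(conflict_set, key=lambda p: p.priority)

def run(rules, working_memory, max_cycles=10):
    memory = set(working_memory)
    fired = []
    for _ in range(max_cycles):
        # Match: rules whose IF part holds and whose THEN part adds something new.
        conflict_set = [p for p in rules
                        if p.conditions <= memory and not p.adds <= memory]
        if not conflict_set:
            break
        chosen = resolve_conflict(conflict_set)  # conflict resolution
        memory |= chosen.adds                    # act: update working memory
        fired.append(chosen.name)
    return memory, fired

rules = [
    Production("crown", frozenset({"can_make_king"}), frozenset({"made_king"}), 2),
    Production("centralize", frozenset({"made_king", "king_near_edge"}),
               frozenset({"king_moved_to_center"}), 1),
]
memory, fired = run(rules, {"can_make_king", "king_near_edge"})
print(fired)  # ['crown', 'centralize']
```

Firing `crown` adds `made_king` to working memory, which is what allows `centralize` to match on the next cycle.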

Let’s discuss each of these components beginning with the working memory component.

We can think of the “working memory” as a large collection of features. The particular pattern of features corresponds to the production system’s internal representation of the problem it is trying to solve. In the checkerboard playing example, we might have a list of features such as: (1) number of pieces that the machine has, (2) number of playing pieces of the opponent, (3) number of kings the machine has, (4) the location of the kings on the playing board, and so on. This collection of features is an abstract representation of the “situation” depicted by the checkerboard. Notice that the choice of features is crucial. A checkerboard expert might have a really good set of features, while a checkerboard novice might have a really poor set of features.

Note that we are talking about a particular theory of representation when we discuss features. Consider the region of space and time in the real world that contains this checkerboard situation, where the pieces on the checkerboard specify the current state of the game. You can take a photograph of this situation, and that would be one way to capture and represent it. However, the goal here is to capture and represent this checkerboard situation in a manner which supports inference and learning for a learning machine. This is typically done by a “mapping” which maps the current state of the checkerboard into a list of numbers called the feature vector. The feature vector is basically the list of features which we just discussed and contains information such as the number of kings the learning machine has on the board, the locations of those kings, the number of playing pieces the opponent has on the board and the locations of those playing pieces, and so on. A photograph of the checkerboard is great for memories, but it is not as useful as a feature vector representation of the checkerboard if we want to implement a machine learning or inference algorithm.
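Here is one hypothetical way to implement such a mapping. The board encoding (`'m'`/`'M'` for the machine’s men and kings, `'o'`/`'O'` for the opponent’s, `'.'` for an empty square) and the choice of four count features are assumptions; the feature vector described above would also include the locations of the kings and pieces.

```python
def feature_vector(board):
    """Map a board (8 strings of 8 characters) to
    [machine pieces, opponent pieces, machine kings, opponent kings]."""
    text = "".join(board)
    machine_kings = text.count("M")
    opponent_kings = text.count("O")
    return [
        text.count("m") + machine_kings,   # all of the machine's pieces
        text.count("o") + opponent_kings,  # all of the opponent's pieces
        machine_kings,
        opponent_kings,
    ]

# A made-up position, used only to exercise the mapping.
board = [
    ".m.m.m.m",
    "m.m.m.m.",
    "........",
    "........",
    "...M....",
    "........",
    ".o.o.o.o",
    "o.o.o.O.",
]
print(feature_vector(board))  # [9, 8, 1, 1]
```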

Let’s now consider the rule component of a production system.

The IF component of each IF-THEN rule is always defined in terms of which features are present or absent in working memory. So, for example, suppose there is a feature in working memory which states that you have an opportunity to make a checker piece a KING, and suppose that in addition there is a rule which states:

IF you have an opportunity to make a checker piece a KING, THEN make it a KING.

The production system would then deduce that the IF part of the IF-THEN rule was true and would execute the THEN part of the IF-THEN rule. In such a situation we say the IF-THEN production rule has fired. As a consequence of this firing, the machine moves the checker piece accordingly and also updates its internal representation of the checkerboard in its working memory.

When the opponent makes a move, this changes the physical checkerboard situation. The machine’s perceptual system, which is presumably watching the physical checkerboard, then modifies the internal working memory representation of the checkerboard.

This leads us to the third component, which is called the “conflict resolution” component. When the IF portions of multiple IF-THEN rules are true at the same time, this component decides whether to allow ALL of those rules to fire or to allow only a few of them to fire.

One approach is to allow only one rule at a time to fire. In such cases, the conflict resolution system must “prioritize” which rules seem to be most important at the time and select the rule which has the “higher priority”. So, for example, suppose you had one rule in the checker playing machine which basically said: IF a KING is near the edge of the board, THEN move the KING towards the center. And suppose you had another rule which said: IF moving the KING in this situation would cause you to lose the game, THEN do not move the KING. In this scenario, we have two rules which are in conflict. One rule says move the KING. The other rule says do not move the KING. However, avoiding losing the game is a very high priority, so the conflict resolution system should choose the rule which prevents the machine from losing the game.
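The priority-based approach in this example might be sketched as follows. The priority numbers and feature names are hypothetical; the point is only that when both rules match, the higher-priority “avoid losing” rule is the one that fires.

```python
# Hypothetical rules: a higher priority number means more important.
rules = [
    {"name": "move_king_to_center", "if": {"king_near_edge"},
     "then": "move king toward center", "priority": 1},
    {"name": "avoid_losing", "if": {"king_near_edge", "moving_king_loses"},
     "then": "do not move king", "priority": 10},
]

def choose_action(rules, working_memory):
    # Conflict set: every rule whose IF part is satisfied by working memory.
    conflict_set = [r for r in rules if r["if"] <= working_memory]
    if not conflict_set:
        return None
    # Allow only the single highest-priority rule to fire.
    return max(conflict_set, key=lambda r: r["priority"])["then"]

print(choose_action(rules, {"king_near_edge"}))
# move king toward center
print(choose_action(rules, {"king_near_edge", "moving_king_loses"}))
# do not move king
```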

In this way, we have a collection of rules and a mechanism for using those rules to interact with a physical environment. This type of approach has been applied in a number of areas of artificial intelligence as well as in the development of formal models of human cognition. For several decades, John R. Anderson and his colleagues at Carnegie Mellon University have been developing a production system approach to modeling human cognition called “ACT-R”. Another classic production system is the SOAR cognitive architecture, developed by Newell, Laird, and Rosenbloom, also at Carnegie Mellon University. SOAR has been applied to problems in artificial intelligence as well as to modeling human behavior.

The show notes at www.learningmachines101.com provide some relevant references to the production system literature. However, there are several important issues associated with production system methods for guiding artificial intelligence that need to be discussed. The first issue has already been mentioned: the problem of representation. We need to have the right features to represent a problem. If the features we are using do not adequately represent the situation we are considering, then regardless of how smart the system is or how many rules it has, the system will not behave in an intelligent manner. So this is the first crucial point. Picking the right features for a problem is one of the greatest challenges in artificial intelligence. With the wrong representation, an artificial intelligence problem can be impossible to solve. With the right representation, the same problem can be easily solved. The right features can be picked by the “designer” of the artificially intelligent system. Or, in some cases, advanced learning capabilities can be provided to an artificially intelligent system so that it can actually learn its own features. However, we will need at least an entire episode, or multiple episodes, to explore this idea.

The second crucial issue is the problem of sufficient knowledge. Suppose that we have the right set of features and a large collection of rules. Even if we have thousands of rules for playing a game of checkers, it is possible that this collection of rules will not yield a solution that enables the machine to win the game.

In some cases we can actually build into the production system the capability of determining whether a solution (e.g., a way of winning a checkers game) exists by checking if the collection of rules is SUFFICIENT for computing such a solution (although the ability to do this in all situations is not necessarily guaranteed).
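For the simple case of rules whose conditions are sets of facts that must all be true (no negation, no variables), sufficiency can be checked by forward chaining: apply rules until nothing new can be derived, then see whether the goal appeared. The sketch below assumes that simplified setting; the facts `a`, `b`, `c`, `d`, and `win` are placeholders. Removing one rule plays the role of a missing jigsaw puzzle piece.

```python
def derivable(rules, facts, goal):
    """Return True if goal can be derived from facts by forward chaining.

    rules is a list of (conditions, conclusion) pairs, where conditions is a
    set of facts that must all be known before conclusion can be added.
    """
    known = set(facts)
    changed = True
    while changed:  # repeat until a full pass adds nothing new
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return goal in known

rules = [({"a"}, "b"), ({"b"}, "c"), ({"c", "d"}, "win")]
print(derivable(rules, {"a", "d"}, "win"))                # True
rules_missing_piece = [rules[0], rules[2]]                # the b -> c rule is lost
print(derivable(rules_missing_piece, {"a", "d"}, "win"))  # False
```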

One way to visualize this problem is to imagine that you are trying to put together a jigsaw puzzle. Each jigsaw puzzle piece corresponds to an IF-THEN rule. The IF part of the puzzle piece needs to “match” the current state of working memory. The current state of working memory is the partially completed jigsaw puzzle on your living room floor. If you complete the entire jigsaw puzzle, then this corresponds to the case where you have won the game of checkers. However, suppose that some of the important jigsaw puzzle pieces are missing from the box, but you don’t know they are missing when you start your jigsaw puzzle project. You think that there should be enough jigsaw puzzle pieces in the box to complete the jigsaw puzzle…but (in reality) there are not.

No matter how smart you are at putting together jigsaw puzzles, you still will not be able to complete the jigsaw puzzle. This is analogous to having a large number of IF-THEN rules representing knowledge about a domain. It might appear that you have enough knowledge to solve the problem of interest, but some IF-THEN rules might be missing, and their absence prevents you from solving the problem.

The third crucial issue is the problem of inconsistent knowledge. Suppose that we are loading up a machine with knowledge but, without realizing it, we load it up with some contradictory knowledge. For example, we might have one rule which states that in Situation X you should move your KING towards the center and another rule which states that in Situation X you should move your KING away from the center. This is similar to, but different from, the problem of “conflict resolution”, because when we apply a conflict resolution strategy we assume that a resolution to the conflict exists. In this case, we have a conflict but there is no resolution.
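A production system designer could catch the simplest form of this problem automatically. The sketch below assumes a hand-written table `CONTRADICTS` listing pairs of actions that cannot both be carried out, and it only flags rules whose IF parts are identical; rules with overlapping but different conditions would need a more careful check. The rule names and situation labels are hypothetical.

```python
from itertools import combinations

# Hypothetical table of actions that cannot both be carried out.
CONTRADICTS = {
    frozenset({"move_king_toward_center", "move_king_away_from_center"}),
}

def inconsistent_pairs(rules):
    """Return name pairs of rules with the same IF part but contradictory THEN parts."""
    pairs = []
    for (name1, if1, then1), (name2, if2, then2) in combinations(rules, 2):
        if if1 == if2 and frozenset({then1, then2}) in CONTRADICTS:
            pairs.append((name1, name2))
    return pairs

rules = [
    ("r1", frozenset({"situation_x"}), "move_king_toward_center"),
    ("r2", frozenset({"situation_x"}), "move_king_away_from_center"),
    ("r3", frozenset({"situation_y"}), "move_king_toward_center"),
]
print(inconsistent_pairs(rules))  # [('r1', 'r2')]
```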

Using our puzzle solving analogy, this corresponds to the case where the puzzle you are trying to solve includes inconsistent puzzle pieces. If you use one of these inconsistent puzzle pieces, then the other inconsistent puzzle piece cannot be used. Basically, this corresponds to the case where someone has sabotaged your jigsaw puzzle and replaced one of the pieces with another piece which prevents you from ever completing the puzzle. However, even though you can’t complete the puzzle, you can still measure your progress by counting the number of pieces left over. The problem is then reformulated by replacing the goal of “completing the jigsaw puzzle” with the revised goal of “putting the pieces together in the jigsaw puzzle such that the number of remaining unused pieces is as small as possible.”
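The reformulated goal can be sketched as a brute-force search for the largest set of pieces that contains no conflicting pair (in graph terms, a maximum independent set of the conflict graph). This sketch assumes every non-conflicting piece can be used, ignoring whether pieces actually fit together, and its running time grows exponentially with the number of pieces, so it is only suitable for tiny examples. The piece names are hypothetical.

```python
from itertools import combinations

def largest_consistent_subset(pieces, conflicts):
    """Return a largest subset of pieces that contains no conflicting pair.

    conflicts is a set of frozensets, each naming two distinct pieces that
    cannot both be used. Brute force: try subsets from largest to smallest.
    """
    for size in range(len(pieces), 0, -1):
        for subset in combinations(pieces, size):
            chosen = set(subset)
            if not any(pair <= chosen for pair in conflicts):
                return chosen
    return set()  # reached only when there are no pieces at all

pieces = ["p1", "p2", "p3", "p4"]
conflicts = {frozenset({"p2", "p3"})}  # the sabotaged pair of pieces
used = largest_consistent_subset(pieces, conflicts)
print(sorted(used), len(pieces) - len(used))  # ['p1', 'p2', 'p4'] 1
```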

The fourth crucial problem is that it is hard to represent information about the real world using logical rules. In the real world, there are almost always exceptions, which makes knowledge hard to capture using rules.

What are the rules that you use to go shopping at the store?

IF you want milk, THEN go to the store.

IF you are at the store and want milk, THEN take the milk off the shelf.

IF you want milk and you are at the store and have milk in your hands, THEN go to the cashier.

IF you want milk and you are at the store and have milk in your hands and you are at the cashier, THEN pay the cashier.

This seems reasonable at first, but what if (1) the store is out of milk, or (2) you left your wallet at home?

Here is another example.

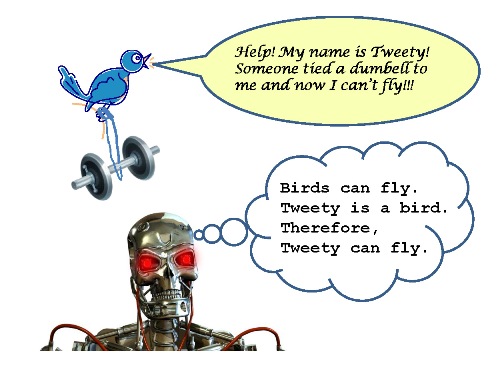

Suppose we have a rule that: “IF a living thing is a bird, THEN it can fly.” This seems like a reasonable rule but what if the bird is a penguin or ostrich?

Or suppose the bird is a canary but its feet are in concrete? Or perhaps the bird has an injured wing?
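One common patch is to keep the rule as a default and list the exceptions explicitly. The sketch below, with its hypothetical exception sets, shows why this does not scale: every new exception, such as a kiwi or a bird with clipped wings, must be added by hand.

```python
# Hypothetical exception lists; each new case has to be added manually.
NON_FLYING_SPECIES = {"penguin", "ostrich"}
DISABLING_CONDITIONS = {"injured_wing", "feet_in_concrete"}

def can_fly(species, conditions=()):
    """Default rule: IF a living thing is a bird, THEN it can fly, unless excepted."""
    if species in NON_FLYING_SPECIES:
        return False
    if DISABLING_CONDITIONS & set(conditions):
        return False
    return True  # default conclusion for an ordinary bird

print(can_fly("canary"))                        # True
print(can_fly("penguin"))                       # False
print(can_fly("canary", ["feet_in_concrete"]))  # False
```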

And finally, a third example. How could we define a car?

IF an object is a car, THEN the car travels on the ground and transports people or things.

But what if the car is a “flying car” or “swimming car” which can also fly in the air or swim in the water? Would we still call this a car? What about a plastic toy car you can put in your pocket?

Virtually any concept that we can imagine and try to define using rules will have its exceptions. This is a more fundamental problem which goes beyond the concepts of having a consistent collection of rules or a sufficient collection of rules. It is, in some sense, a fundamental problem with the entire idea of trying to represent any specific piece of knowledge as a logical IF-THEN rule.

Nevertheless, rule-based systems are widely used in the field of artificial intelligence, and a critical challenge in artificial intelligence involves trying to represent the world in terms of rules.

Thank you again for listening to this episode of Learning Machines 101! I would also like to remind you that if you are a member of the Learning Machines 101 community, please update your user profile and let me know what topics you would like me to cover in this podcast. You can update your user profile when you receive the email newsletter by simply clicking on the “Let us know what you want to hear” link!

If you are not a member of the Learning Machines 101 community, you can join the community by visiting our website at www.learningmachines101.com, and you will have the opportunity to update your user profile at that time. You can also post requests for specific topics or comments about the show in the Statistical Machine Learning Forum on LinkedIn.

From time to time, I will review the profiles of members of the Learning Machines 101 community and comments posted in the Statistical Machine Learning Forum on LinkedIn and do my best to talk about topics of interest to the members of this group!

And don’t forget to follow us on TWITTER. The Twitter handle for Learning Machines 101 is “lm101talk”!

Also please visit us on ITUNES and leave a review. You can do this by going to the website: www.learningmachines101.com and then clicking on the ITUNES icon. This will be very helpful to this podcast! Thank you so much. Your feedback and encouragement are greatly valued!

Keywords: Features, Logical Rules, Production Systems

Further Reading:

- The Wikipedia article “Production System” (http://en.wikipedia.org/wiki/Production_system) provides a useful review of production systems as well as references to the SOAR production system.

- The book “Artificial Intelligence: Foundations of Computational Agents” by Poole and Mackworth (2010, Cambridge University Press) is freely available and can be downloaded at http://artint.info/html/ArtInt.html. This book has discussions of “heuristic search” and “features” as well as other relevant topics.

- Nils Nilsson’s book The Quest for Artificial Intelligence (Nilsson, 2010, Cambridge University Press) discusses the concept of a Production System as well as the SOAR and ACT-R production systems on pages 467-474. This book is in the “reading list” on the website www.learningmachines101.com.

- Also take a look at John Laird’s book The Soar Cognitive Architecture (MIT Press, 2012), which describes SOAR, a production system designed for both artificial intelligence and the development of formal models of cognition!

- Check out the SOAR home page at http://sitemaker.umich.edu/soar/home to learn more about the SOAR project!

- Also check out the ACT-R home page at http://act-r.psy.cmu.edu/, which describes the ACT-R “cognitive architecture” developed by John R. Anderson and his colleagues, implemented as a production system for specifying and testing theories of human cognition.

Copyright Notice:

Copyright © 2014-2018 by Richard M. Golden. All rights reserved.