
Episode Summary:

In this episode, we address the important questions of “What is a neural network?” and “What is a hot dog?” by discussing human brains, neural networks that learn to play Atari video games, and rat brain neural networks.

Show Notes:

Hello everyone! Welcome to the thirty-fifth podcast in the podcast series Learning Machines 101. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in hopefully an entertaining and educational manner.

In this episode, we address the important questions of “What is a neural network?” and “What is a hot dog?” by discussing human brains, neural networks that learn to play Atari video games, and rat brain neural networks.

You may have heard the terminology “neural network” in the news. What is a “neural network”? A biologist might explain a neural network in the following way. The human brain consists of brain cells which are called “neurons”. A neuron consists of thin fiberlike dendrites which carry information into the brain cell’s body in the form of electrical currents. The sum of these electrical currents is transformed by a variety of chemical and electrical mechanisms as well as the physical shape of the neuron into a voltage potential across the cell membrane. When the voltage across the cell membrane reaches a critical value, a voltage spike called the “action potential” occurs which is effectively propagated down the axon of the neuron which can be considered to be the neuron’s output. The axon of the neuron connects via “synaptic junctions” to the dendritic inputs of other neurons and initiates current flow in the neurons to which it connects. This is what a biologist or neuroscientist would call a “neural pathway” or a “biological neural network”.

In 1943, McCulloch and Pitts hypothesized that an abstraction of this system could be mathematically represented as a device which computes the sum of its inputs and generates a 1 if the sum of its inputs is greater than a threshold and generates a 0 otherwise. They then proved that networks of such devices could implement any arbitrary logical function. In fact, they had developed the concept of a “logic gate” in their attempt to develop a mathematical model of a neuron and their work was very influential in the development and documentation of how modern digital computers work. Unfortunately, it turned out by the 1970s that this abstraction of reality was simply wrong as a model of the neuron. It is computationally powerful and is the basis of all digital computer technology, and it is inspired by the brain, yet it was ultimately determined that the biological details of the original model were incorrect.
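To make the threshold device described above concrete, here is a minimal sketch in Python. The function names, the connection weights (including the negative weight used for NOT), and the particular threshold values are illustrative assumptions and are not taken from the original 1943 paper. The sketch only shows that a few such units can be wired together to compute simple logical functions.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0


# Weights and thresholds below are illustrative choices (assumptions).
def AND(a, b):
    return threshold_unit([a, b], [1, 1], threshold=1.5)


def OR(a, b):
    return threshold_unit([a, b], [1, 1], threshold=0.5)


def NOT(a):
    # A negative (inhibitory) weight turns the unit into an inverter.
    return threshold_unit([a], [-1], threshold=-0.5)


def XOR(a, b):
    # A small network of units: (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", AND(a, b), "OR:", OR(a, b), "XOR:", XOR(a, b))
```

Notice that XOR cannot be computed by a single unit of this kind, so it is built here from a small network of units, which is the sense in which networks of such devices implement logical functions.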

The relevant informational feature in brains turned out to be the “firing frequency” of voltage spikes generated from a neuron rather than the presence or absence of a voltage spike generated from a neuron. For example, increased sensory stimulation can be shown to systematically increase the firing frequencies of sensory neurons while increased firing frequencies in motor neurons have been shown to evoke motor responses. In fact, the first mathematical neural network model which successfully explained neurophysiological data modeled how visual information was integrated in the eye of a horseshoe crab. Hartline and Ratliff showed that the integration of visual information in the horseshoe crab eye could be quantitatively modeled in great detail using a linear system. For his work in this area of neuroscience, Hartline shared the Nobel Prize in 1967 with Granit and Wald. Thus, just because an abstract model of the brain “works great” (e.g., digital logic gates work great) doesn’t mean that the abstract model of the brain is actually instantiated in a biological nervous system. The criteria for a good model of the brain will be different for different investigators.

Let’s now turn to another abstract model of the brain which was described in a recent article published in the international weekly journal Nature titled “Human-level control through deep reinforcement learning” (for more technical details see the NIPS 2013 Deep Learning Workshop paper http://arxiv.org/abs/1312.5602). The Nature and NIPS papers describe a “deep neural network” which learned to play 49 different types of ATARI video games such as “pong” and “space invaders” without supervision. If you go to the show notes, I have provided links to some videos which show how the deep neural network learns from experience to play the ATARI video games: “pong”, “seaquest”, “breakout”, and “space invaders”.

Although a great many researchers have contributed to the development of this system, the authors of the 2013 Neural Information Processing Systems paper which is the basis for the discussion here are Mnih, Kavukcuoglu, Silver, Graves, Antonoglou, Wierstra, and Riedmiller. The “deep neural network” was simply presented with a video screen consisting of 210 rows of pixels with 160 pixels in each row, where the pixels changed color 60 times per second to display the video game action. At each time-step the deep neural network selects one of a fixed number of possible actions and also receives a number indicating the change in the game score. It is important to emphasize that the numerical game score reflects a summary of the system’s performance over time and should not be considered to be direct feedback regarding whether a particular action by the learning machine is appropriate. This type of learning problem where the learning machine does not receive feedback regarding the consequences of its current actions until sometime in the distant future was discussed in previous episodes of Learning Machines 101 (check out Episode 2 and Episode 25).

The details of the learning machine are provided in the NIPS 2013 Deep Learning Workshop Paper whose link is in the show notes. But briefly, a preprocessed version of several frames of the video image is processed by a feedforward convolutional neural network with three layers of hidden units similar to the architecture described in Episode 29. The output layer is a layer of output units where each output unit corresponds to a specific consequence of a particular action. These consequences are not immediate consequences but rather they are estimates of the expected average performance of the system into the distant future under the assumption that the learning machine’s future behavior is optimal. The learning machine’s strategy, therefore, is to typically select the action which maximizes its expected future performance but every so often the learning machine takes a random action in order to help the learning machine explore the consequences of novel actions. A “transition” consists of taking the last few preprocessed video game frames which we will call the initial frame sequence, generating an action, receiving a numerical change in the game score, and then observing the immediate consequences of that action which are expressed by a subsequent consequent frame sequence of a few preprocessed video game frames. A gradient descent algorithm is used to adjust the parameters of the learning machine by minimizing the sum-squared difference between the predicted consequence of an action and an estimate of a weighted sum of future game score changes which is computed such that game score changes received in the very far distant future are weighted as less important. The weights are defined by a number called the “discount factor” which is between 0 and 1. Smaller values of the “discount factor” correspond to the case where game score changes in the distant future are considered to be less important.

The weighted sum of future game score changes is estimated using the Q-learning method which works out after some algebra to be the current game score change plus the discount factor multiplied by the network’s largest predicted consequence over the available actions given the consequent frame sequence and the network’s current parameter values. The technical details of the “Deep Q-Learning Algorithm” are provided in the NIPS 2013 Deep Learning Workshop paper. A review of Q-learning can also be found in the Wikipedia Article on Q-Learning or the original paper by Watkins and Dayan (1992) although the NIPS 2013 paper is reasonably clear as well.
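The ingredients just described can be sketched in a few lines of Python. This is only a toy illustration and not the code used in the paper: the function names, the value of the exploration probability, and the small lists of numbers standing in for the network's output units are all assumptions made for the example. In the real system the predicted consequences come from the convolutional network rather than from a hand-written list.

```python
import random


def discounted_return(score_changes, discount):
    """Weighted sum of future score changes; later changes count for less."""
    total = 0.0
    for step, change in enumerate(score_changes):
        total += (discount ** step) * change
    return total


def choose_action(predicted_values, explore_prob, rng=random):
    """Usually pick the action with the largest predicted value,
    but every so often pick a random action to explore."""
    if rng.random() < explore_prob:
        return rng.randrange(len(predicted_values))
    return max(range(len(predicted_values)), key=lambda a: predicted_values[a])


def q_learning_target(score_change, next_predicted_values, discount, game_over=False):
    """Q-learning estimate of the weighted sum of future score changes:
    current score change + discount * largest predicted value for the next frames."""
    if game_over:
        # Assumption in this sketch: no future score changes after the game ends.
        return score_change
    return score_change + discount * max(next_predicted_values)


def squared_error(predicted_value, target):
    """The quantity that gradient descent tries to make small."""
    return (predicted_value - target) ** 2


if __name__ == "__main__":
    # Hypothetical numbers standing in for the network's output units.
    values_now = [0.2, 1.0, 0.4]
    values_next = [0.5, 2.0, 0.1]
    action = choose_action(values_now, explore_prob=0.1)
    target = q_learning_target(score_change=1.0,
                               next_predicted_values=values_next,
                               discount=0.9)
    print("action:", action)
    print("target:", target)
    print("error:", squared_error(values_now[action], target))
```

With a discount factor of 0.5, for example, three consecutive score changes of 1 are worth 1 + 0.5 + 0.25, so a score change two steps into the future counts only a quarter as much as an immediate one.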

Now this network is referred to as a “deep neural network” and it embodies a number of heuristics and methods which can be identified with how the brain processes information. The fact that it works using an extremely simple neurally plausible learning rule provides an existence proof that there exists a computer algorithm which can solve this hard learning problem by a relatively simple strategy of strengthening and weakening connections. Such an existence proof is sometimes referred to as a “computational sufficiency analysis”.

Note that this type of research is of great interest to engineers and computer scientists interested in solving problems in artificial intelligence and building intelligent systems. However, most neuroscientists would have difficulty in understanding the sense in which such a system makes explicit testable predictions in the field of neuroscience or adds to our understanding of neuroscience.

Certainly this type of research provides an example of a computational sufficiency analysis which states that, given this particular representation of data and a network architecture with specific features, the network can solve some extremely hard problems in artificial intelligence. In other words, some neuroscientists are probably very excited about the performance of this network, while other neuroscientists would say that it has nothing to do with the brain or human information processing systems.

A “caricature” of a human face is not a photograph. It is an abstraction of reality which exaggerates some features at the expense of others. The point of a caricature might be to convey a particular emotion or intellectual state and emphasize the relationship of that emotion or intellectual state to the face. Or the caricature might exaggerate a physical feature of the face emphasizing the physical distinctiveness of that face. In some sense, the caricature of a face has the potential to convey selected information about that face more clearly than a photograph and yet the caricature is not a realistic representation of a face. In the same sense, abstract computational models such as a deep learning network may provide interesting hypotheses about what types of minimal network architectures are sufficient to solve hard computational problems. These hypotheses, in turn, might inspire neuroscientists, psychologists, and cognitive scientists to experimentally test those hypotheses using behavioral studies and neurophysiological measurements. But that is not the goal of the “artists” who are creating the “deep net caricatures” of the brain. Their goal is to solve problems in artificial intelligence! If their deep networks work better than any existing algorithm on the planet yet turn out to be incorrect models of the brain, the deep networks will still be used in artificial intelligence applications.

This is a fundamentally important point!!! So I will repeat this key concept. The success of “Deep learning networks” such as the “video game learning machine” is evaluated solely upon their ability to learn, generalize, and perform. It may be hoped that such networks will advance our knowledge and understanding of the mind and brain…and they might…or they might not. If they don’t advance our knowledge and understanding of the mind and brain, this is not a critical factor in evaluating their success.

You may have heard claims that a “neural network” of the type we have described here “approximates the brain”. This claim is both true and false in the same sense that we might say that a “caricature of a face” is a good approximation of the original face. Whether or not the caricature is a good approximation will depend in large part on who is making that judgment and for what purposes. Every caricature of reality is fundamentally flawed but that is not a problem because a caricature of the brain is important in presenting a particular strong (and possibly wrong) claim about what features of the brain are important and relevant. This approach to making strong claims about reality and then attempting to falsify such claims is the basis of the scientific method. The claim that the deep learning ATARI neural network cannot learn can be falsified by showing an example of learning in the ATARI neural network. However, specific claims about neurophysiological measurements cannot be tested within such a framework without additional theory and simulations.

Now let’s consider a quite different example of an abstract neural network. In 2005, the Ecole Polytechnique Federale de Lausanne, a university in Lausanne, Switzerland, undertook an ambitious goal which is referred to as The Blue Brain Project. Check out the show notes for the website and the Blue Brain Video Introduction. They wanted to build a computer simulation of a biological brain on a supercomputer system. It is important to emphasize that the computational complexity of the abstract neurons used in the McCulloch-Pitts neural network or the Atari deep learning neural network is extremely low. To compute the output of a neuron with K inputs in these artificial neural networks requires on the order of K multiplications and K additions. A biologically realistic simulated neuron is enormously more complex and requires the equivalent of a laptop computer to properly simulate a single biologically realistic neuron. The shape and type of the neuron will generate different response characteristics and systems of nonlinear equations must be solved in an iterative manner in order to characterize how ionic currents flow and voltage potentials change. The project has succeeded in simulating a rat cortical column, which is a neuronal network the size of a pinhead consisting of 10,000 neurons. A rat’s brain has about 100,000 of such columns which make up the rat’s cortex which is a sheet of neurons that plays a major role in the rat’s higher level cognitive and learning processes. Human cortex is organized similarly as a large sheet of such columns but human brain cortex may have as many as two million columns with 100,000 neurons in each column.
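To see why the abstract neurons mentioned above are so cheap to compute, here is a small sketch of a unit with K inputs. The function name, the bias term, and the choice of a logistic (sigmoid) squashing function are assumptions made for illustration. The point is simply that the loop performs one multiplication and one addition per input, followed by a single cheap nonlinearity.

```python
import math


def artificial_unit(inputs, weights, bias=0.0):
    """Output of an abstract neuron with K inputs.

    The loop does K multiplications and K additions. The logistic
    squashing function at the end is an illustrative assumption.
    """
    total = bias
    for x, w in zip(inputs, weights):
        total += x * w  # one multiplication and one addition per input
    return 1.0 / (1.0 + math.exp(-total))


if __name__ == "__main__":
    # Hypothetical unit with K = 3 inputs.
    print(artificial_unit([1.0, 0.0, 1.0], [0.5, -2.0, 0.25]))
```

A biologically realistic neuron, by contrast, has no such one-line formula, which is why its simulation requires iteratively solving systems of nonlinear equations.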

An important component of the Blue Brain project involving over 80 research centers across Europe is called the “Human Brain Project”, which attempts to collect and integrate as much of our current knowledge of neuroscience as possible and embody this knowledge as a detailed mathematical model of the brain. The ultimate goal of the Blue Brain Project is to reverse engineer the mammalian brain by creating detailed simulation models of both healthy and diseased brains with different levels of detail in different species.

It’s interesting to contrast the attitude of the researchers working on the deep learning neural networks in artificial intelligence with the attitude of the researchers working on the Blue Brain Project. The attitude of the Deep Learning neural network researchers is that by building machine learning algorithms inspired by abstract models of the brain one can develop computationally powerful solutions to problems in artificial intelligence. Ultimately, it is hoped, this will lead us to a deeper understanding of the mind and brain.

The attitude of the Blue Brain neural network researchers is that by building biologically realistic models of the brain one can integrate and advance our existing knowledge of biological neuroscience by instantiating this in a mathematical model which makes testable neurophysiological predictions. Ultimately, it is hoped that this will lead us to a deeper understanding of the mathematical basis of information processing principles in biological brains.

These two quite different groups of researchers are both interested in simulating “neural networks” but their criteria for success are quite different. Thus, it is important to appreciate that the terminology “neural network” will have a fundamentally different meaning to different groups of researchers.

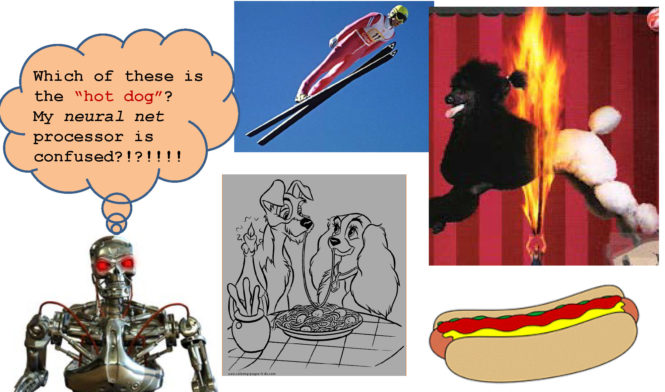

In the English Language, there is a phrase called “hot dog”. To a non-native speaker of the English language this seems like a rather odd phrase. Why is one talking about a canine who has a high temperature? Does the dog have a fever? Is the dog on fire? Or, the terminology “hot dog” may also be used to refer to a “skier” or “surfer” who performs dangerous acrobatic stunts. Or, the terminology “hot dog” could refer to a very good looking poodle which you would like to have your dog date! Or finally, the terminology “hot dog” might refer to a particular type of sausage served in a long roll where the long roll has been divided along its length. As you can see, the terminology “hot dog” will have a fundamentally different meaning in different contexts for different individuals.

Today, it is important to appreciate that the phrase “neural network” shares this same quality as well. The terminology “neural network” will have a fundamentally different meaning in different contexts for different individuals.

Based upon the previous discussion, it’s probably worth mentioning five popular definitions of the phrase “neural network”. This is not a complete list but it is at least a start.

- A feedforward network architecture with multiple layers, where each layer involves a nonlinear transformation processing function, is commonly assumed to be what is meant by a neural network in the popular literature. Such architectures were discussed in Episode 15, Episode 20, Episode 23, and Episode 29.

- An actual biological network of biological neurons such as might be found in a human brain, dog brain, cat brain, rat brain, or mouse brain! Let’s not forget that the terminology “neural network” can refer to the biological neural pathways!

- A Machine Learning Algorithm whose architecture may be interpreted as inspired by brain structure but the success of the system is evaluated based upon its ability to solve hard problems in inference and learning. In other words, its computational performance is used as a measure of success rather than how effectively the machine accounts for biological data. An example of this type of “neural network” is the ATARI video game learning machine.

- A biologically realistic model of specific neural circuitry. This circuitry is not designed or intended to solve specific computations or solve specific problems in inference and learning. The success of this neural network is evaluated in terms of how well it models specific neural circuits and how well it can make predictions of specific neurophysiological measurements in real brains. An example of this type of neural network is the neural network under construction at the Blue Brain Project.

- An abstract model of neural processing which illustrates how a computation might be accomplished but is not designed or intended to solve a hard problem in inference and/or learning. In addition, the model is not biologically realistic but has only a few qualitative properties of biological systems. Nevertheless, it is designed to provide an argument of computational sufficiency for a very large class of neural architectures. One example of such a network might be the Kohonen self-organizing neural network which consists of a group of hidden units whose inputs are connected to sensor units. Associated with each hidden unit and sensor unit is a numerical state which will be called the unit’s “activity level”.

Initially the input sensor units are randomly connected to the hidden units. In addition, each hidden unit is associated with a learning rule which tends to adjust the parameters of that hidden unit so that when it sees a particular sensory pattern of information that hidden unit becomes more activated in the future. In addition, there is a constraint in the system which forces neighboring hidden units to have similar response properties. With these two qualitative constraints, one can demonstrate in simulation studies that the resulting learning machine creates self-organizing maps in which units with similar response properties cluster together and more frequent input sensory patterns are detected by more hidden units. These two properties of this simple neural network are called “topological organization” and “cortical magnification” and are intrinsically characteristic of mammalian brains. Thus, this simple neural network illustrates that these two simple learning rule features which might be common to a wide range of neural network architectures are sufficient to account for these important qualitative features of mammalian brains.
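The two qualitative constraints described above for the Kohonen network can be sketched in a short simulation. This is a deliberately tiny, one-dimensional version with a single sensor value and a row of hidden units, and it is a sketch under stated assumptions rather than Kohonen's own formulation: the function name, the Gaussian neighborhood function, and the particular schedules for the learning rate and neighborhood radius are all illustrative choices.

```python
import math
import random


def train_som(n_units=10, n_steps=5000, seed=0):
    """Train a tiny one-dimensional self-organizing map.

    Each hidden unit has one weight connecting it to a single sensor unit.
    The schedules and the Gaussian neighborhood are illustrative assumptions.
    """
    rng = random.Random(seed)
    # Initially the sensor unit is randomly connected to the hidden units.
    weights = [rng.random() for _ in range(n_units)]
    for step in range(n_steps):
        progress = step / n_steps
        learning_rate = 0.5 * (1.0 - progress) + 0.01 * progress
        radius = 3.0 * (1.0 - progress) + 0.5 * progress
        x = rng.random()  # a sensory pattern (here just one number in [0, 1))
        # The most strongly responding unit is the one whose weight is closest to x.
        winner = min(range(n_units), key=lambda i: abs(weights[i] - x))
        for i in range(n_units):
            # Constraint 2: neighbors of the winner are pulled along with it.
            influence = math.exp(-((i - winner) ** 2) / (2.0 * radius ** 2))
            # Constraint 1: move the weight toward the pattern so the unit
            # responds more strongly to this pattern in the future.
            weights[i] += learning_rate * influence * (x - weights[i])
    return weights


if __name__ == "__main__":
    for index, weight in enumerate(train_som()):
        print(index, round(weight, 3))
```

In runs of this kind one would typically expect neighboring hidden units to end up with similar weights, which is a toy version of “topological organization”. Presenting some sensory patterns more often than others would be the natural way to explore “cortical magnification” with the same sketch.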

Hopefully today’s show provided you with a new perspective on “what is a neural network” as well as “what is a hot dog?”. So the next time someone comments on a “neural network” or offers you a “hot dog”, hopefully you will think twice!!!

Thanks for your participation in today’s show!

If you are a member of the Learning Machines 101 community, please update your user profile.

You can update your user profile when you receive the email newsletter by simply clicking on the: “Let us know what you want to hear” link!

Or if you are not a member of the Learning Machines 101 community, when you join the community by visiting our website at: www.learningmachines101.com you will have the opportunity to update your user profile at that time.

Also check out the Statistical Machine Learning Forum on LinkedIn and Twitter at “lm101talk”.

From time to time, I will review the profiles of members of the Learning Machines 101 community and do my best to talk about topics of interest to the members of this group!

Further Reading and Videos:

“Human-level control through deep reinforcement learning” (Nature Paper—not technical)

NIPS 2013 paper ATARI Video Game Learning Machine (technical version of Nature Paper)

Videos of ATARI Deep Learning Neural Network Learning to Play Video Games

“pong”, “seaquest”, “breakout”, and “space invaders”

Related Episodes of Learning Machines 101:

Episode 2, Episode 25, Episode 15, Episode 20, Episode 23, and Episode 29

The Blue Brain Project website and the Blue Brain Video Introduction

Wikipedia Article on Q-Learning and the original Q-Learning paper by Watkins and Dayan (1992)

McCulloch-Pitts Formal Neural Network

Hartline Biologically Realistic Linear Neural Network (Nobel Prize Lecture)